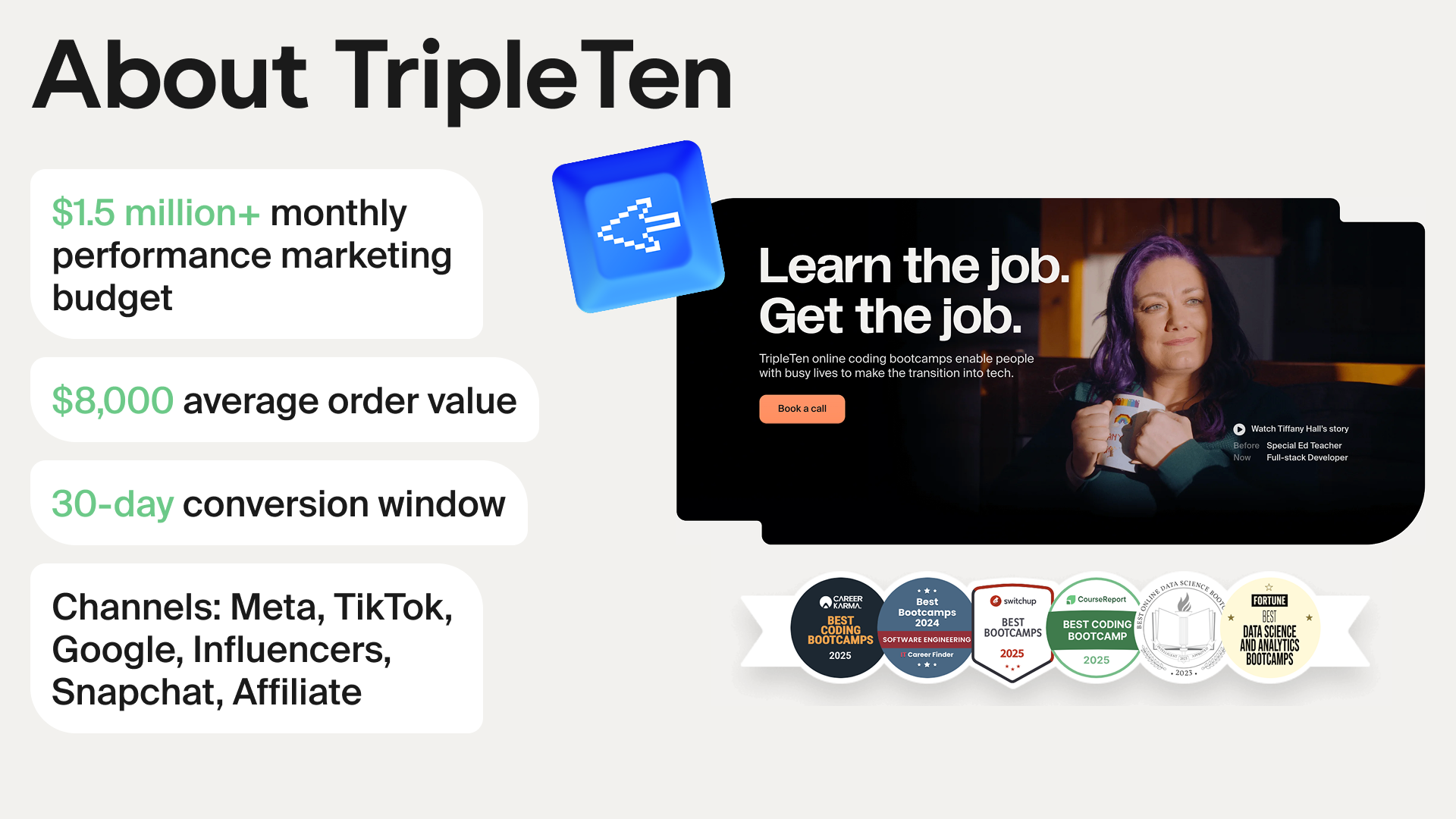

At TripleTen, an online coding bootcamp that helps people launch their career in tech, user acquisition is a key growth driver.

As we scaled, however, we ran into an algorithmic limitation: ad platform algorithms didn’t have enough conversion events to optimize effectively. This limitation pushed our customer acquisition cost (CAC) above benchmarks and limited campaign efficiency. To solve it, we turned to predictive AI, a shift that transformed our optimization strategy and cut CAC by nearly 50%.

In performance marketing, there are generally two approaches to campaign optimization. The first is post-analysis: breaking down results by campaign, audience, and creative, and making adjustments based on the findings. Despite its limitations, this method remains surprisingly popular. The second is auto-optimization: relying on the built-in algorithms of Google, Meta, and other platforms to do most of the heavy lifting.

Neither method works in isolation; they need to operate in tandem to remain effective. Below, I’ll walk through each of them and explain why, in our case, neither was enough.

Post-campaign analysis

Post-analysis is foundational for us. If you set the wrong principles or misconfigure automation early on, the entire campaign is doomed from the start — and no amount of analysis can save it later.

We rely on a few key tools during this stage.

1. Monitoring performance across each channel on three levels

Campaign level.

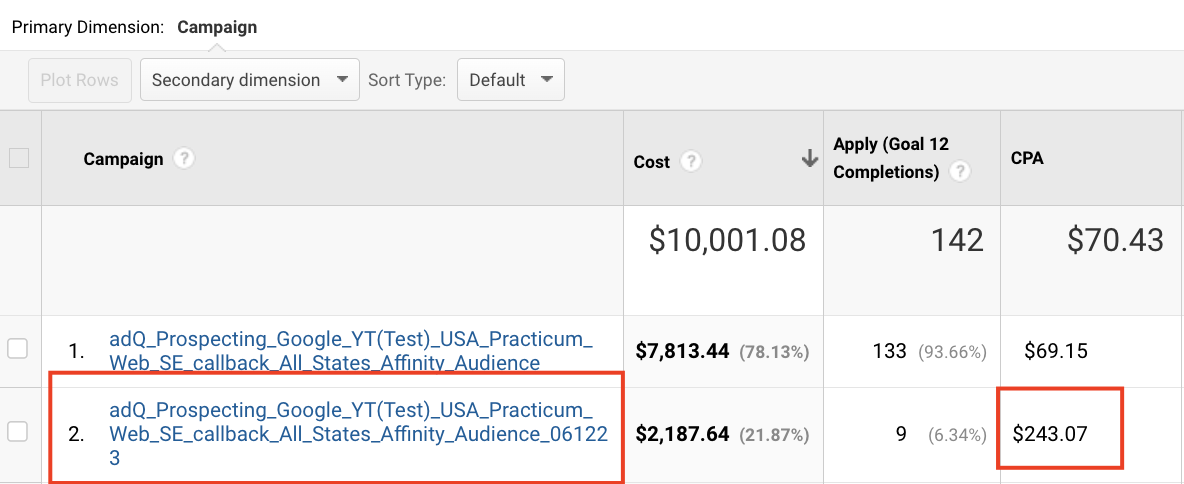

Suppose we’re running two similar campaigns. If CAC in the first is noticeably lower than in the second, we’ll reallocate the budget toward the stronger performer to maximize results. It’s quick and straightforward. But it’s equally important to go deeper — even inside an underperforming campaign, you’ll often find segments worth scaling.

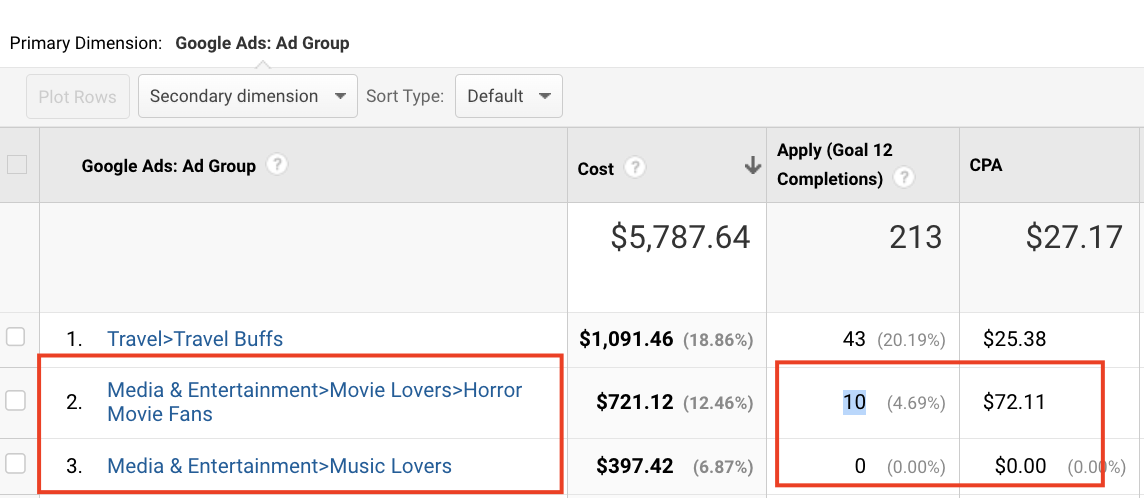

Audience level.

We evaluate CAC across different audience segments — for example, travelers, media enthusiasts, or music lovers. In some segments, CAC can be several times higher than average. By pausing those audiences or lowering bids, we improve overall performance and scale more efficiently.

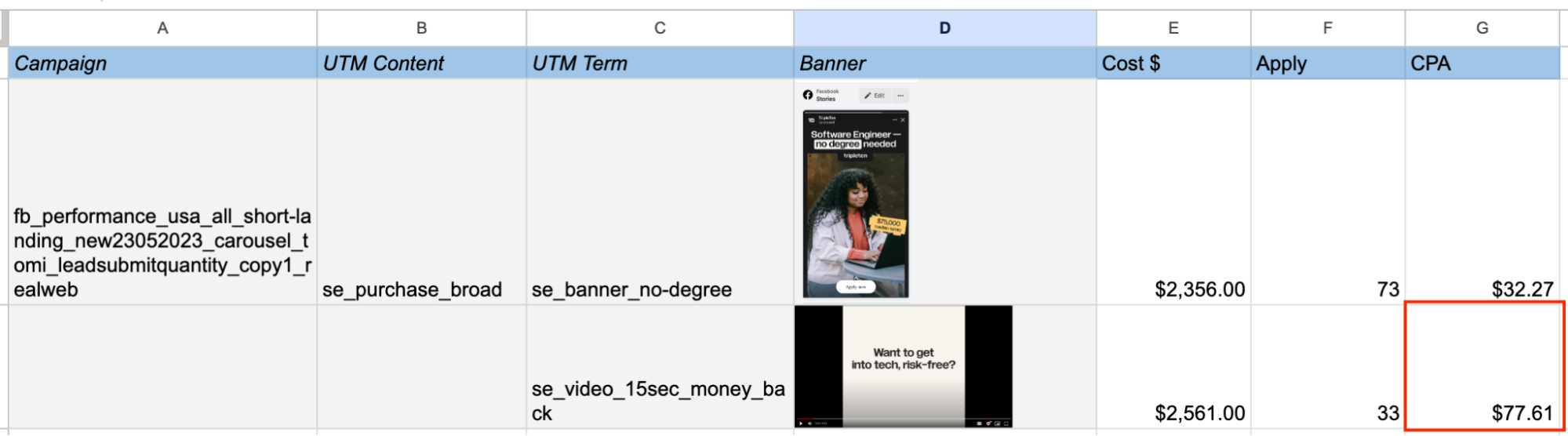

Creative level.

In performance marketing, creatives directly shape outcomes. CTR impacts CPC, and CPC impacts CAC. Our job is to pause creatives with inflated acquisition costs while constantly testing new ones to keep performance fresh.
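The three-level breakdown above can be sketched in a few lines of Python. This is a minimal illustration, not our reporting stack: the rows, segment names, the `cac_by` and `segments_to_review` helpers, and the "2x the blended CAC" review rule are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical report rows: (campaign, audience, creative, spend_usd, new_customers)
ROWS = [
    ("campaign_a", "travelers", "video_1", 1200.0, 4),
    ("campaign_a", "music_lovers", "static_1", 900.0, 1),
    ("campaign_b", "travelers", "static_1", 1500.0, 2),
    ("campaign_b", "media_enthusiasts", "video_1", 600.0, 0),
]

# Column index of each reporting level inside a row
LEVELS = {"campaign": 0, "audience": 1, "creative": 2}


def cac_by(rows, level):
    """Return {segment: CAC} for one level; CAC is None when a segment has no customers."""
    idx = LEVELS[level]
    spend = defaultdict(float)
    customers = defaultdict(int)
    for row in rows:
        spend[row[idx]] += row[3]
        customers[row[idx]] += row[4]
    return {seg: (spend[seg] / customers[seg] if customers[seg] else None) for seg in spend}


def segments_to_review(rows, level, multiple=2.0):
    """Segments with spend but no customers, or with CAC >= `multiple` x blended CAC."""
    total_customers = sum(r[4] for r in rows)
    if total_customers == 0:
        return sorted(cac_by(rows, level))
    blended = sum(r[3] for r in rows) / total_customers
    return sorted(
        seg
        for seg, cac in cac_by(rows, level).items()
        if cac is None or cac >= multiple * blended
    )


print(cac_by(ROWS, "campaign"))
print(segments_to_review(ROWS, "audience"))
```

The same two functions work at the campaign, audience, and creative level, which makes it easy to spot a strong segment hiding inside a weak campaign. Flagged segments are candidates for pausing or lower bids, not automatic cuts.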

2. Adjusting bids and budgets

When launching campaigns, we always set a target CPA. Adjusting bids can significantly improve performance — but with a catch: if the bid is too low, ads may not deliver at all. That’s why testing bids and daily budgets is non-negotiable.

3. Limiting placements and keywords to top performers

We regularly trim irrelevant keywords, add negative keywords, and cut interest segments that don’t deliver. This helps us quickly zero in on what works — and stop wasting budget.

Unlike marketers, ad platforms have near-infinite insight into user profiles — interests, attributes, signals. But that only works if the business sends conversion events back to the platforms.

Auto-optimization within ad platforms

The second approach we rely on is built-in auto-optimization. This is the backbone of most modern campaigns — platform algorithms handle much of the heavy lifting when it comes to audience selection and bidding.

Naturally, we asked ourselves: if we’re already feeding conversion data into the platforms, why not optimize for purchases? After all, that’s our ultimate business goal.

Here’s how it works in practice:

- We launch a campaign.

- Users click through to the website.

- A portion of them not only visits but submits an application — an event directly tied to a purchase.

We then send that application event back to the ad platform. The platform attributes it to the specific click, ad, and audience that drove it. From there, its algorithm finds similar users and shows ads to people more likely to convert.

In other words, the platform optimizes for conversions and gradually brings CAC down.

When launching a campaign, we define two parameters:

- The event that matters to the business. For us initially, that was purchases.

- The target cost per acquisition.

From there, the system takes over — using its algorithm to find users most likely to convert into program enrollments at or below our target CPA.

Criteria for choosing optimization events

- Frequency: at least 50 events per week.

- Speed: the event should occur quickly — ideally within the same session or a couple of days — to stay within attribution windows.

- Correlation with payment: users who trigger the event should have a high probability of becoming paying customers.

When standard strategies fell short

After several weeks of testing, we still weren’t hitting our KPIs. CAC remained too high. A deeper analysis revealed the issue wasn’t the channels or even the algorithms — it was the events we were feeding into them.

We realized that in our business, optimizing for purchases simply wasn’t feasible.

- Purchase events were too infrequent. Algorithms need ~50 conversions per week per campaign to exit learning mode — we never hit that threshold.

- Purchase events took too long to occur. The average delay between application and payment in our business is ~30 days. That pushed us outside attribution windows, making it nearly impossible for platforms to attribute conversions accurately.

Even with correct setup, the platforms just didn’t have enough timely data to optimize effectively.

The core issue: “Purchase” was the wrong optimization event — too rare and too delayed. As a result, CAC climbed and performance became unstable.
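The three selection criteria (frequency, speed, correlation with payment) can be expressed as a simple check. The sketch below is illustrative only: the `CandidateEvent` type, the `failed_criteria` helper, the 20% minimum purchase rate, and all event figures are assumptions, apart from the 50-per-week and roughly 30-day numbers mentioned above.

```python
from dataclasses import dataclass


@dataclass
class CandidateEvent:
    name: str
    events_per_week: int   # weekly volume per campaign
    days_to_event: float   # typical delay before the event fires
    purchase_rate: float   # share of users with this event who later pay


def failed_criteria(event, min_per_week=50, max_days=2.0, min_purchase_rate=0.2):
    """Return the criteria an event fails; an empty list means it is usable."""
    failed = []
    if event.events_per_week < min_per_week:
        failed.append("frequency")
    if event.days_to_event > max_days:
        failed.append("speed")
    if event.purchase_rate < min_purchase_rate:  # cutoff is an assumed value
        failed.append("correlation")
    return failed


# Hypothetical figures for illustration
purchase = CandidateEvent("purchase", events_per_week=15, days_to_event=30.0, purchase_rate=1.0)
mid_funnel = CandidateEvent("mid_funnel_event", events_per_week=120, days_to_event=0.5, purchase_rate=0.3)

print(failed_criteria(purchase))
print(failed_criteria(mid_funnel))
```

A purchase event correlates perfectly with revenue by definition, yet it fails the other two criteria, which is the situation we found ourselves in.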

The solution wasn’t to change channels or budgets — it was to shift the optimization signal. We needed to feed the algorithms better, earlier data, so we turned to predictive events.

The turning point: integrating Tomi.ai

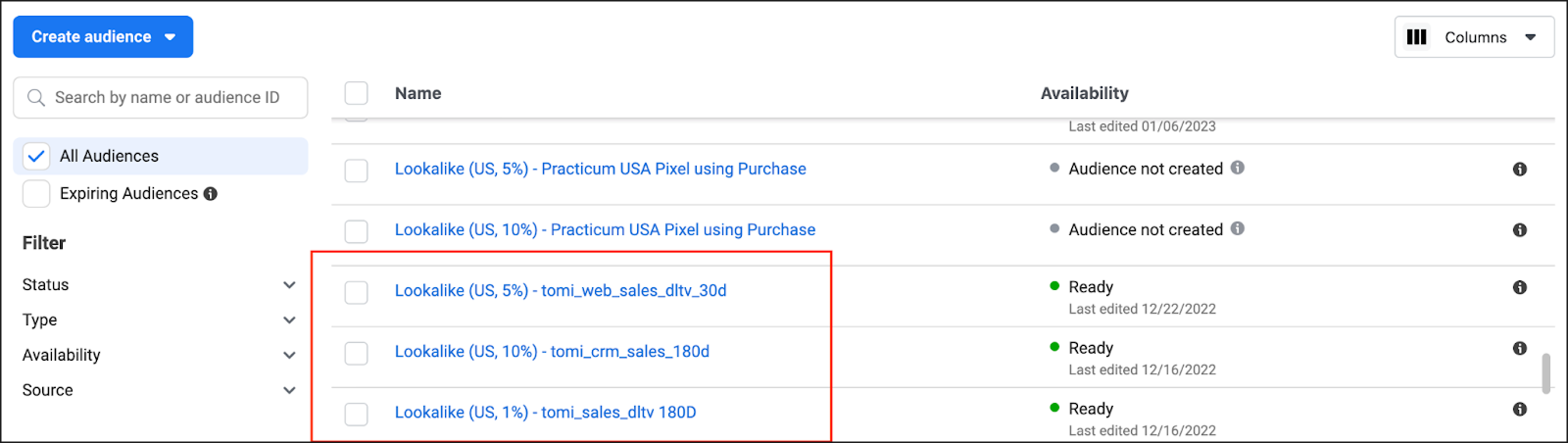

To break out of this trap, we moved away from purchase-based optimization and adopted a lookalike-driven approach. The goal was to send platforms stronger signals, based on users who submitted applications and closely resembled our actual paying customers.

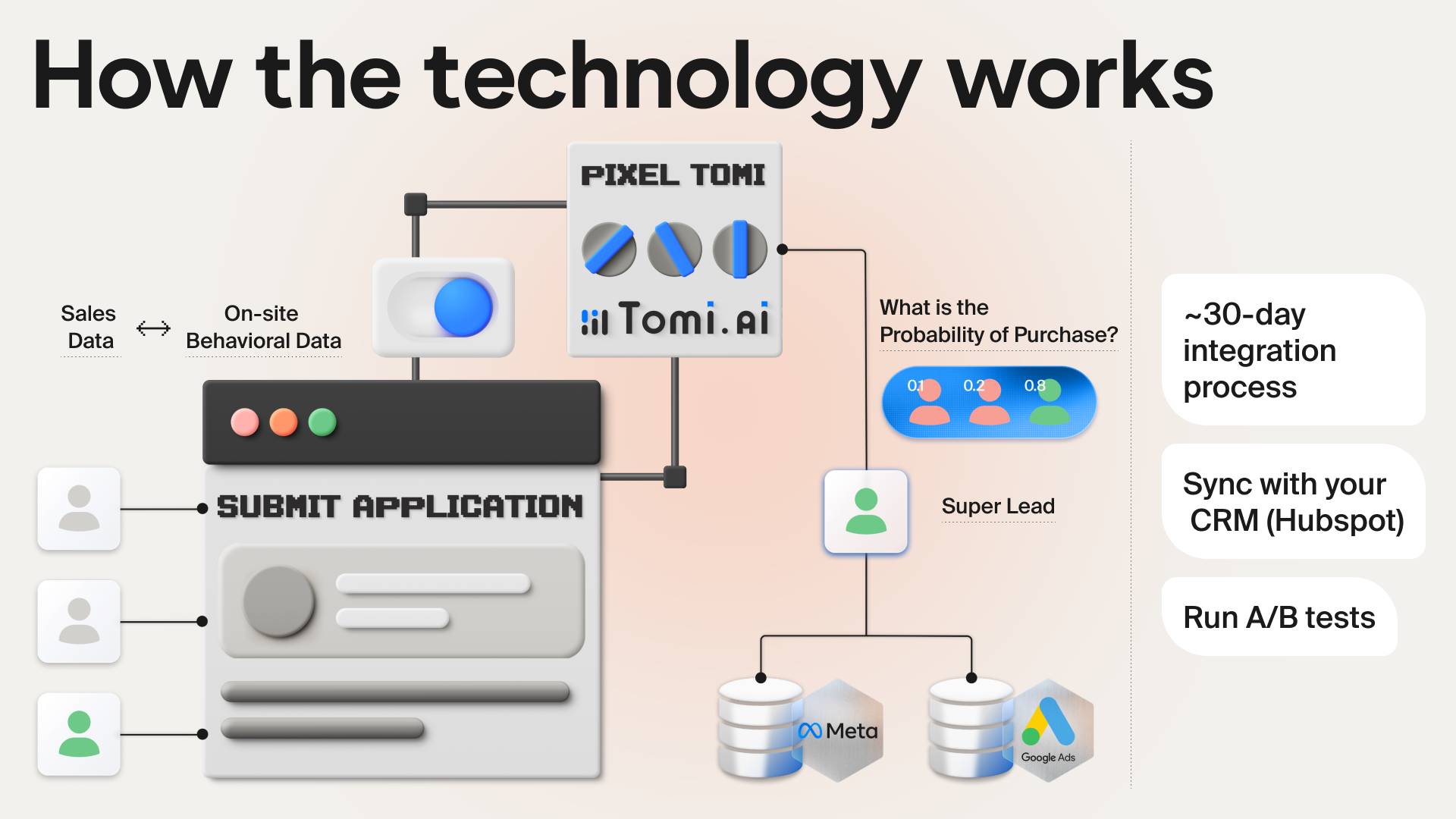

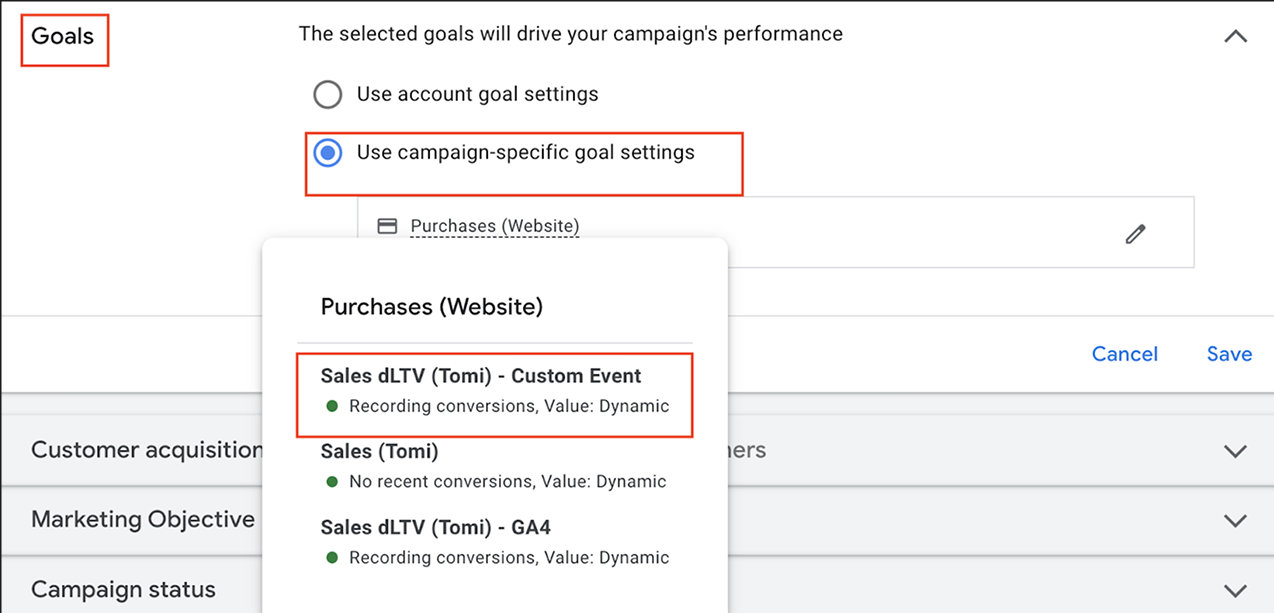

We selected Tomi.ai because its predictive models consistently identified high-intent users early in the funnel and pushed those events directly into Google and Meta in real time. This allowed us to feed high-quality predictive events into the algorithms and train automated strategies on users who behaved like future buyers — not just applicants.

Here’s how it works with Tomi.ai:

- We launch campaigns.

- Users land on the site and submit applications.

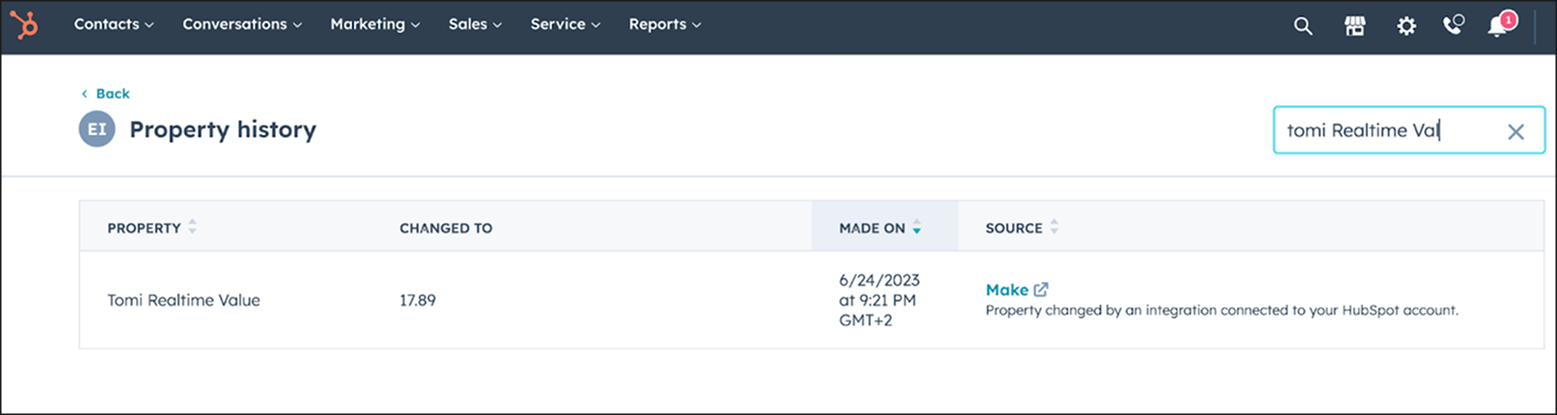

- Tomi.ai evaluates each user’s likelihood to become a paying customer by comparing them to historical converters using two data sources: CRM sales outcomes and onsite behavioral signals.

- Each user receives a predictive score from 0 to 1. A score of 0 indicates very low purchase probability, while 1 indicates very high purchase probability. We set our threshold at 0.8.

- Users with a score above 0.8 are classified as Super Leads. We send the Super Lead event back to Google Ads and Meta as our primary optimization event.

A word of caution: setting the threshold too high can backfire. You need a balance — events must still be frequent enough (50+ per week) to keep campaigns out of learning mode while still being strongly predictive of purchases.
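The threshold trade-off can be sketched as follows. This does not show Tomi.ai’s API or model, only the classification step downstream of it. The `super_leads` and `highest_viable_threshold` helpers and the evenly spread scores are assumptions made for the example.

```python
def super_leads(scored_leads, threshold=0.8):
    """Return the IDs of leads whose predictive score is above the threshold."""
    return [lead_id for lead_id, score in scored_leads if score > threshold]


def highest_viable_threshold(weekly_scores, candidates, min_events=50):
    """Highest candidate threshold that still yields `min_events` events per week.

    Returns None when no candidate produces enough events.
    """
    for threshold in sorted(candidates, reverse=True):
        if sum(1 for score in weekly_scores if score > threshold) >= min_events:
            return threshold
    return None


# Hypothetical week of 300 scored applications, spread evenly between 0 and 1
weekly_scores = [i / 300 for i in range(300)]

print(super_leads([("u1", 0.93), ("u2", 0.41), ("u3", 0.8)]))
print(highest_viable_threshold(weekly_scores, [0.7, 0.8, 0.9]))
```

With this made-up volume, a 0.9 threshold would leave too few events per week, so 0.8 is the strictest setting that keeps campaigns supplied with signal. On real data the answer depends on lead volume and on how the scores are distributed.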

The results

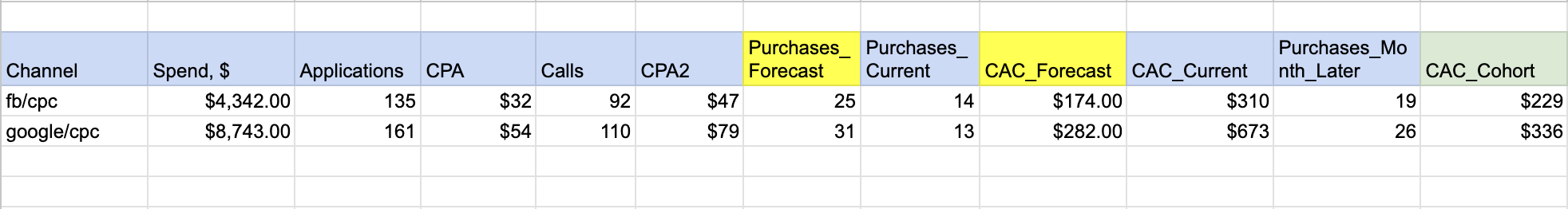

To validate the hypothesis, we ran a one-month A/B test with identical audience sizes, budgets, and campaign settings.

Campaign A: optimized for regular lead submissions.

Campaign B: optimized for Super Leads.

The results were clear:

- Conversion-to-purchase rate was 2x higher in Campaign B with Tomi.ai’s predictive Super Lead events.

- Cost per lead (CPL) remained nearly the same.

- CAC dropped significantly, because Super Leads converted to paying students at a much higher rate.
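The link between these three results is simple arithmetic: CAC equals spend divided by purchases, or equivalently CPL divided by the lead-to-purchase rate. The sketch below uses made-up spend and lead counts (not our actual test data) and a hypothetical `funnel_metrics` helper to show the relationship.

```python
def funnel_metrics(spend, leads, purchases):
    """Compute cost per lead, lead-to-purchase rate, and CAC for one campaign."""
    return {
        "cpl": spend / leads,
        "conversion_rate": purchases / leads,
        "cac": spend / purchases,
    }


# Hypothetical numbers: same spend and lead volume, twice as many purchases in B
campaign_a = funnel_metrics(spend=10_000, leads=200, purchases=10)
campaign_b = funnel_metrics(spend=10_000, leads=200, purchases=20)

print(campaign_a)
print(campaign_b)
print(campaign_b["cac"] / campaign_a["cac"])
```

When CPL stays flat and the conversion-to-purchase rate doubles, CAC is cut in half, which is consistent with the roughly 50% reduction mentioned at the start of this article.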

Even across broad audiences, optimizing on Super Leads ensured that ads reached users who closely resembled our best-fit customers.

Beyond CAC: the additional value of Tomi.ai

What surprised us most was how many additional benefits we unlocked beyond lowering CAC. Three stand out:

1. Lead prioritization in CRM. We now score and prioritize incoming leads by their likelihood to purchase, enabling our sales team to focus on the highest-intent prospects first.

2. Retargeting and lookalike audiences. Super Leads became the foundation for building high-quality lookalike segments. Platforms started finding users who closely resembled our paying students, helping us scale without sacrificing lead quality — even in retargeting flows.

3. Predictive revenue and CAC forecasting. With Tomi.ai’s scoring, we can forecast how many leads will convert into paying students and what CAC will be. For a business with a 30-day sales cycle, this is a game-changer: instead of waiting a month for final conversion data, we can evaluate campaign performance within days.
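One simple way to turn scores into an early forecast is to treat each score as a purchase probability and add them up. This is an assumption made for illustration, not a description of how Tomi.ai computes its forecasts, and it only holds if the scores are well calibrated. The `forecast` helper and the numbers are hypothetical.

```python
def forecast(scores, spend):
    """Estimate expected purchases and CAC from per-lead purchase probabilities.

    Assumes each score is a calibrated probability of purchase.
    """
    expected_purchases = sum(scores)
    forecast_cac = spend / expected_purchases if expected_purchases > 0 else None
    return expected_purchases, forecast_cac


# Hypothetical scores for four leads acquired with $3,000 of spend
scores = [0.875, 0.75, 0.25, 0.125]
expected, cac = forecast(scores, spend=3_000)

print(expected, cac)
```

Comparing such a forecast with the actual purchases once the sales cycle closes is a practical way to check whether the scores can be trusted for budgeting decisions.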

In our case, Tomi.ai’s prediction accuracy exceeded 90%, enabling us to move from gut-instinct decisions to truly data-driven ones.

Key insights

- Not all conversions are created equal. Standard events like “Lead” or “Purchase” aren’t always the best optimization signals.

- Mid-funnel events matter. The right optimization signal is frequent enough for the algorithm to learn and strongly correlated with real business outcomes.

- AI is a partner, not a replacement. Automated bidding works only when we feed platforms the right data.

Implementing predictive scoring changed more than just our metrics: it transformed our entire performance strategy. Instead of manually patching campaigns, we began designing signals that guide algorithms toward what really drives business results.